After being waylaid a bit by stomach bugs, I got a lot more hardware stuff working over the last two days. I got my ultrasonic range finder board wired up to the main microcontroller. It figures out distance using the method which bats use – emit a chirp, and then listen for the echo. In terms of coding, this means I’ve got external interrupts and 16-bit timer stuff working pretty well. I’ve been putting all of the code into a class “MyLibrary” … a bundle of code I hope to reuse in the future. Anyway, on with the pretty photos …

This is the ultrasonic range-finder board, with the emitter/listener pair.

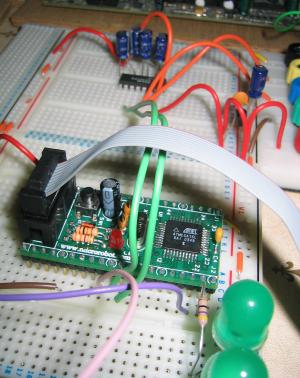

This is my main microcontroller circuit. The Atmega16L is the big chip in the foreground, with 8Mhz timer crystal, reset circuitry and in-circuit programming connector. A couple of super-bright green LED’s are there to increase the blinkenlight coefficient. In the background, a MAX232 uses a capacitor charge-pump to convert the voltage levels so that the Atmega chip can talk to the serial port on my PC. And at the back-right, there’s a simple 5v power supply with filtering caps.

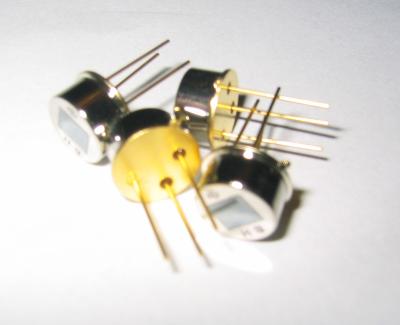

There are coming up next. They’re infrared thermopiles, which detect IR radiation and are a simple and effective way of detecting where the horizon is. More importantly, they’re very shiny. 🙂